How to back up a Firebase Realtime Database

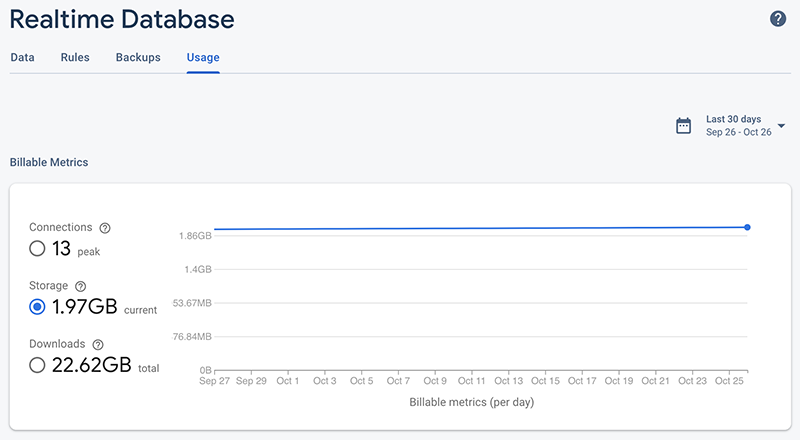

One object in one of my Firebase Realtime Database projects contains 855,932 keys (total JSON size is 1.6GB) and is growing daily.

Firebase has a payload limit of 256MB (see all limits).

This means I cannot download the object using the customary REST API out of the box.
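To put the limit in perspective, here is a rough back-of-the-envelope estimate of how many separate requests a chunked download would need. It uses the figures quoted above and assumes, purely for illustration, a target of about 100MB per request and keys of roughly equal size.

```php
<?php
// Figures quoted above.
$totalBytes = 1.6e9;   // 1.6GB of JSON
$keyCount   = 855932;  // keys in the object

// Assumption for illustration: aim for about 100MB per request,
// comfortably below the 256MB payload limit.
$targetBytes = 100e6;

$avgBytesPerKey = $totalBytes / $keyCount;
$keysPerRequest = (int) floor($targetBytes / $avgBytesPerKey);
$requests       = (int) ceil($keyCount / $keysPerRequest);

printf("~%d bytes per key, %d keys per request, %d requests\n",
    round($avgBytesPerKey), $keysPerRequest, $requests);
```

With these figures that works out to roughly 1.9KB per key, about 53,000 keys per request and 17 requests. Real keys vary in size, so treat this as an order-of-magnitude estimate only.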

I was locked out of my own data for backup purposes.

As if this weren't bad enough, when you try anyway, the PAYLOAD_TOO_LARGE error causes the Firebase Realtime Database client to freeze: the call never returns and the promise never resolves.

Firebase announced automated daily backups back in 2016.

Sounds great but my issues are:

- I don't need a daily backup

- Firebase only offers an option to have backups older than 30 days automatically purged.

- I do not want to create a whole environment (Google Cloud SDK) to simply download from GCS.

Making your own backups also means you get to decide which ones to keep (for example, weekly backups for the last 30 days, then only monthly backups for the past year).
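A retention rule like the one in the example above could be sketched as follows. The function name backupsToKeep and the exact cut-offs (30 and 365 days, newest backup per ISO week or calendar month) are my own assumptions for illustration; adjust them to your needs.

```php
<?php
// Hypothetical helper: given backup dates as Y-m-d strings, return the ones to keep.
// Rule (an assumption): newest backup per ISO week for the last 30 days,
// then newest backup per calendar month up to 365 days old.
function backupsToKeep(array $dates, string $today): array {
    $now = new DateTimeImmutable($today);
    rsort($dates);   // newest first; Y-m-d strings sort chronologically
    $seen = [];      // week or month buckets that already have a backup
    $keep = [];
    foreach ($dates as $d) {
        $date = new DateTimeImmutable($d);
        $age  = $date->diff($now)->days;   // whole days between backup and today
        if ($date > $now || $age > 365) {
            continue;                      // future-dated or older than a year
        }
        // "o-W" is the ISO year and week number, "Y-m" the calendar month.
        $bucket = $age <= 30 ? 'w' . $date->format('o-W') : 'm' . $date->format('Y-m');
        if (!isset($seen[$bucket])) {
            $seen[$bucket] = true;
            $keep[] = $d;
        }
    }
    return $keep;
}
```

Any backup file whose date is not in the returned list would be a candidate for deletion.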

Here is the script I made for myself to handle this situation.

It is written in PHP, but the process is pretty straightforward if you want to write your own implementation.

Process: retrieve all key names using the shallow method (shallow=true), then batch the queries in chunks that do not exceed the 256MB payload limit.
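The chunking step can be sketched on its own, without any network calls. This is a minimal illustration, not the script itself: the helper names keyRanges and rangeUrl are hypothetical, and it uses non-overlapping ranges so that no key is requested twice.

```php
<?php
// Hypothetical helper: split sorted keys into non-overlapping [first, last] ranges.
function keyRanges(array $sortedKeys, int $pageSize): array {
    $ranges = [];
    foreach (array_chunk($sortedKeys, max(1, $pageSize)) as $chunk) {
        $ranges[] = [(string) $chunk[0], (string) $chunk[count($chunk) - 1]];
    }
    return $ranges;
}

// Hypothetical helper: build the REST URL for one range.
// startAt and endAt are inclusive; values are JSON-quoted, then URL-encoded.
function rangeUrl(string $base, string $from, string $to): string {
    return $base . '.json?orderBy=' . rawurlencode('"$key"')
        . '&startAt=' . rawurlencode(json_encode($from))
        . '&endAt=' . rawurlencode(json_encode($to));
}

// Example with a placeholder database URL.
foreach (keyRanges(['a', 'b', 'c', 'd', 'e'], 2) as [$from, $to]) {
    echo rangeUrl('https://example.firebaseio.com/users', $from, $to), "\n";
}
```

Because both ends of each range are inclusive, giving every chunk its own first and last key avoids fetching boundary keys twice.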

To start creating backups, save the script, customize it for your project and run it from a terminal.

For convenience, the script assumes the database is public (i.e. no authentication).
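If your database is not public, the REST API accepts a credential in the auth query parameter. Here is a minimal sketch of how the request URLs could be extended. The helper name withAuth is hypothetical and not part of the script, and you would need to supply a valid token yourself.

```php
<?php
// Hypothetical helper: append the REST API's auth query parameter to a request URL.
// An empty token leaves the URL unchanged (public database).
function withAuth(string $url, string $token): string {
    if ($token === '') {
        return $url;
    }
    $separator = (strpos($url, '?') === false) ? '?' : '&';
    return $url . $separator . 'auth=' . rawurlencode($token);
}

// Example with placeholder values.
echo withAuth('https://example.firebaseio.com/users.json?shallow=true', 'MY_TOKEN'), "\n";
```

Each URL built in the script would be passed through such a helper before being handed to cURL.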

Also, remember that although you will be saving on storage costs, you will incur bandwidth fees depending on your plan.

Enjoy!

<?php

ini_set('memory_limit','2G');

ini_set('display_errors', 1);

ini_set('display_startup_errors', 1);

error_reporting(E_ALL);

$time_start = microtime(true);

//START CONFIGURATION

$force=true; //overwrite if backup already exists

$useDynamicQuerySize=true; //check random element to pick the ideal query size

$showProgress=true;

$prop="users"; //the object to back up

$backupOrigin=""; //root location of your database, e.g. https://<PROJECT NAME>.firebaseio.com/

//END CONFIGURATION

if($backupOrigin==""){

die("Please provide your databaseURL.\n");

}

$i=0;

$keys=[];

$pgsize=1000;

$backupOrigin=rtrim($backupOrigin,"/")."/".$prop; //works with or without a trailing slash in the database URL

$backupFilename="FirebaseBackup-$prop-".date("Y-m-d").".json";

if(file_exists($backupFilename)){

if($force){unlink($backupFilename);}

else{die("$backupFilename already exists.\n");}

}

$cURL = curl_init();

curl_setopt($cURL, CURLOPT_FOLLOWLOCATION, 1);

curl_setopt($cURL, CURLOPT_TIMEOUT, 30);

curl_setopt($cURL, CURLOPT_RETURNTRANSFER, true);

getDatabaseKeys();

if($useDynamicQuerySize){

checkRandomKeySize();

}

echo "\nWill retrieve data...\n";

$backupFile = fopen($backupFilename, "a") or die("Unable to open file!");

fwrite($backupFile, "{\n");

do {

getData();

} while( $i < $keyCount-1 );

fwrite($backupFile, "}");

fclose($backupFile);

$backupSize=filesize($backupFilename)/1000000;

if($backupSize>1000){

$backupSize=round($backupSize/1000,2)."GB";

}

else {

$backupSize=round($backupSize)."MB";

}

$duration=round(microtime(true)-$time_start);

if($duration>120){

$duration=intval($duration/60)."min".($duration%60)."s";

}

else{

$duration=$duration."s";

}

echo "\n\nBackup complete.\nKeys: $keyCount\nBackup Size: $backupSize\nDuration: $duration\n\n";

function getData(){

global $cURL,$backupOrigin,$backupFile,$i,$keys,$pgsize,$keyCount,$showProgress;

$a=$keys[$i];

$i=min($keyCount-1,$i+$pgsize);

$b=$keys[$i];

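//JSON-quote and URL-encode the boundary keys so that keys containing spaces or other reserved characters do not break the query

$url=$backupOrigin.'.json?orderBy=%22$key%22&startAt='.rawurlencode(json_encode((string)$a)).'&endAt='.rawurlencode(json_encode((string)$b));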

curl_setopt($cURL, CURLOPT_URL, $url);

$r = curl_exec($cURL);

$curlTime=round(curl_getinfo($cURL, CURLINFO_TOTAL_TIME),1)."s";

$curlSize=round(curl_getinfo($cURL, CURLINFO_SIZE_DOWNLOAD_T)/1000000)."MB";

if(!$r){

echo curl_error($cURL)."\n";

die;

}

$r=json_decode($r,true);

if(isset($r['error'])){

print_r($r);

die;

}

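static $chunk=0; //number of chunks processed so far

static $written=0; //number of entries written so far

$chunk++;

foreach($r as $k=>$v){

//startAt and endAt are inclusive, so every chunk after the first repeats the key that ended the previous chunk

if($chunk>1 && (string)$k===(string)$a){continue;}

//write "key":value pairs separated by commas so that the file is a single valid JSON object

fwrite($backupFile, ($written>0?",\n":"").json_encode((string)$k).":".json_encode($v));

$written++;

}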

if($showProgress){

echo "\r".round(($i+1)/$keyCount*100)."% (".($i+1)."/$keyCount Last query: $curlTime $curlSize)";

}

}

function checkRandomKeySize(){

global $keys,$backupOrigin,$pgsize,$cURL;

echo "\nWill check random key size... ";

$curlSize=0;

for($a=0;$a<3;$a++){

$i=$keys[array_rand($keys)];

$url="$backupOrigin/".rawurlencode((string)$i).".json";

curl_setopt($cURL, CURLOPT_URL, $url);

$r = curl_exec($cURL);

$curlSize+=curl_getinfo($cURL, CURLINFO_SIZE_DOWNLOAD_T);

}

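if($curlSize>0){

//$curlSize is the total of 3 samples; aim for about 100MB per query (the limit is 256MB) and never fewer than 1 key

$pgsize=max(1,(int)floor(100000000/$curlSize*3));

}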

echo "done (will set $pgsize keys per query).";

}

function getDatabaseKeys(){

global $cURL,$backupOrigin,$keys,$keyCount;

echo "Retrieving database keys... ";

$url="$backupOrigin.json?shallow=true";

curl_setopt($cURL, CURLOPT_URL, $url);

$keys = curl_exec($cURL);

if(!$keys){

die("\nError retrieving keys.\n".curl_error($cURL)."\n");

}

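$keys=json_decode($keys,true);

//stop early if the response is not a JSON object or the location is empty

if(!is_array($keys) || count($keys)==0){

die("\nNo keys found at $backupOrigin.\n");

}

$keys=array_keys($keys);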

sort($keys);

$keyCount=count($keys);

echo "done ($keyCount keys).";

}

?>

Published: Wed, Oct 26 2022 @ 19:46:12
